In the update section of my previous article on advanced location and camera direction tagging of videos, I've already mentioned that Ubipix, currently the only iOS application to record truly dynamic, almost frame-level (metadata is sampled every second) location and direction info, doesn't really have a decent built-in video recorder.

For example, as has been explained in the article, it's not possible to directly instruct the app not to switch on the torch in low-light situations, unlike with the default, stock Camera app's video recorder. Not mentioned in the article, but certainly visible in both of my Iisalmi fireworks videos published the day before yesterday (HERE and HERE – Ubipix links), the continuous autofocus pretty much messes up videos like those of fireworks. While the stock Camera recorder also uses continuous autofocus, several third-party recorders allow for fully manual focusing, which makes it possible to avoid those really ugly focus hunts.

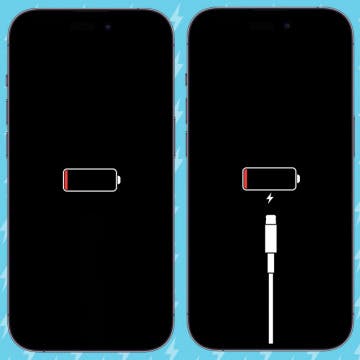

Fortunately, with the new version of my now-Ubipix-compliant logger, it has become possible to record your videos in any other app while running the logger on the same iPhone (or 3G iPad). Of course, you can also run the logger on any other iOS device. Also, as has been explained in the original article, you can use any non-iOS camera (e.g., a GoPro action / sports camera); just make sure you synchronize the internal clock of the camera to that of the iPhone logging the location / direction info.

My logger

First, my logger. As usual, it's available as a freely compilable and deployable Xcode project. I've created a completely new Xcode 4.x project to flawlessly incorporate, among other things, Automatic Reference Counting (ARC; tutorial). The full Xcode project, ready for deployment, is HERE. Feel free to deploy it on your iDevice. Hope you find it useful (and/or the source code instructive).

Switching to ARC has meant having to declare the CLLocationManager instance (so far, a local variable) as a strong property; otherwise, it would simply cease to exist after a second or two. ARC, on the other hand, has saved me from having to manually retain / release the now-global location (CLLocation) / direction (CLHeading) instances assigned in the two callback methods, - (void)locationManager:(CLLocationManager *)manager didUpdateLocations:(NSArray *)locations and - (void)locationManager:(CLLocationManager *)manager didUpdateHeading:(CLHeading *)newHeading, respectively. (Note that the former has also been updated to conform to Apple's recommendation for iOS 6+: it no longer uses the old, now-deprecated locationManager:(CLLocationManager *)manager didUpdateToLocation:(CLLocation *)newLocation fromLocation:(CLLocation *)oldLocation callback. Dedicated discussion also HERE.)

Note that in the first, non-Ubipix-specific version of my app, I didn't need to use any global variables (or properties) because I did all the direct file writes from CLLocationManagerDelegate's callback methods. However, Ubipix's data format can't be output from those two methods for the following reasons:

- a single record must be created every second, independent of whether there's any change or not.

- both location and direction data must be provided in these records, meaning there must be a way to communicate the last-used values to the other callback method so that they both can be output at the same time

- there is no way of accessing location / direction info synchronously, that is, from a method called via a timer, meaning we absolutely must use CLLocationManagerDelegate's callbacks.

This is why I needed to create two new global variables, CLLocation *savedLocation and CLHeading *savedHeading. Both are set from the CLLocationManagerDelegate callbacks. Without ARC, they would have to be retained in the callback methods so that they are still alive when the timed callback (here, mainTimerCallback) is executed and accesses them. The latter, in turn, would need to release them to avoid memory leaks. I also need to copy the location reference to a backup variable (CLLocation *prevTrueLocation) so that I can output the previous value when location info becomes temporarily unavailable, which would have made releasing much more complicated. In other words, switching to ARC did simplify the code considerably (the only exception being that the CLLocationManager instance, strictly local before the switch, had to be declared as a strong property).
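The interplay between the two asynchronous callbacks and the once-per-second timer can be illustrated with a small, language-neutral sketch. It is written in Python rather than the project's Objective-C, and it is not the code in the Xcode project: the class `LoggerState` and its method names are hypothetical, and the rule that a record needs both a location and a heading is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

LatLon = Tuple[float, float]


@dataclass
class LoggerState:
    """Hypothetical stand-in for savedLocation, savedHeading and prevTrueLocation."""
    saved_location: Optional[LatLon] = None      # written by the location callback
    saved_heading: Optional[float] = None        # written by the heading callback
    prev_true_location: Optional[LatLon] = None  # last usable location seen by the timer

    def on_location(self, lat_lon: Optional[LatLon]) -> None:
        # Plays the role of didUpdateLocations; None models "no usable fix right now".
        self.saved_location = lat_lon

    def on_heading(self, degrees: float) -> None:
        # Plays the role of didUpdateHeading.
        self.saved_heading = degrees

    def on_timer(self) -> Optional[Tuple[LatLon, float]]:
        """Plays the role of the per-second timer callback.

        Returns a (location, heading) record, or None while nothing usable exists.
        """
        if self.saved_location is not None:
            # Remember the newest good location so it can be reused later.
            self.prev_true_location = self.saved_location
        location = self.prev_true_location
        if location is None or self.saved_heading is None:
            return None
        return (location, self.saved_heading)


state = LoggerState()
state.on_heading(90.0)
state.on_location((63.56, 27.19))
first = state.on_timer()    # both values known: a record is produced
state.on_location(None)     # the fix is lost for a while
second = state.on_timer()   # the previous location is reused
```

The point of the sketch is only the data flow: the callbacks store values, and the timer is the single place where a record is assembled.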

This has already been mentioned, but it's worth dedicating a full paragraph to. In the previous version of the logger, I simply didn't export anything while I didn't have any meaningful location (and heading) information. Ubipix's logging format, however, requires records from the very first second. As one may not have any meaningful location info in the first few seconds, the large if expression in the mainTimerCallback callback method just returns without outputting anything in that case. The only thing it does is increase the counter of the current second, currSecondIndex, which is needed later: once the first usable location has been acquired, the same location is output for all the seconds before it. (Note that I've added a lot of comments to this method explaining why the different conditional branches are needed and when execution enters them.)
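The back-filling behaviour described above can be sketched as follows. This is a Python illustration of the idea only, not a translation of mainTimerCallback: the function name `records_with_backfill` and the `(second, fix)` record shape are hypothetical, and the input is simplified to one sample per second, with `None` meaning that no usable fix was available in that second.

```python
def records_with_backfill(samples):
    """Turn per-second samples into records that start at second 0.

    samples: list with one entry per second; None means "no usable fix".
    Returns a list of (second_index, fix) tuples.
    """
    records = []
    curr_second_index = 0
    last_fix = None
    for sample in samples:
        if sample is None and last_fix is None:
            # Nothing usable yet: emit nothing, only count the second.
            curr_second_index += 1
            continue
        if last_fix is None:
            # First usable fix: reuse it for every second that was skipped.
            for second in range(curr_second_index):
                records.append((second, sample))
        if sample is not None:
            last_fix = sample
        # From now on, one record per second, repeating the last fix if needed.
        records.append((curr_second_index, last_fix))
        curr_second_index += 1
    return records


print(records_with_backfill([None, None, "A", "B", None]))
```

With the sample input, the first fix `"A"` arrives in second 2 and is also written for seconds 0 and 1, while the gap in second 4 repeats `"B"`.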

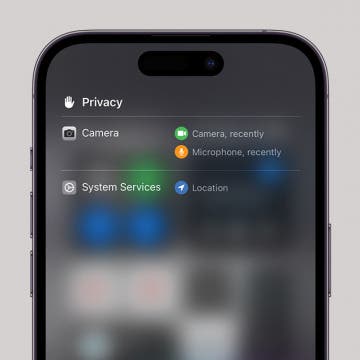

Should you just output an empty CLLocation for currently-unknown locations, Ubipix's server will treat the video as having been shot at the true 0,0 Earth coordinates, as in the following screenshot (as usual, click for the original, better-quality one!):

The recorded tracks are named the same way as in the previous version.

Note that I've used the standard [NSTimer scheduledTimerWithTimeInterval] for setting up the repeated callbacks. It makes it possible to call back non-CLLocationManagerDelegate methods. Should you delete the NSTimer-based setup and switch to the [CADisplayLink displayLinkWithTarget] above it (commented out), it'll also call back (even when running in background) but only when there's something on the screen. Should you want to use the app as a generic logger (mostly with the screen off), you won't need to change anything. Should you, on the other hand, want to use the logger for background tracking strictly for videos recorded on the same iPhone, you might want to enable the CADisplayLink-specific code (and disable the NSTimer instruction) – after all, it'll help reduce the log file size as nothing will be logged when you suspend the device.

Using the logger

Let's see how the logger should be used with a video recorded on the same iPhone. (With an external camera, you'll need to synchronize the clocks and/or convert to a Ubipix upload-compliant format first.)

First, you'll need the exact timestamp of the start of the recording. Unfortunately, neither VLC nor QuickTime Player displays this. A screenshot of the latter:

However, the free and absolutely excellent MediaInfo does:

(note the annotated part).

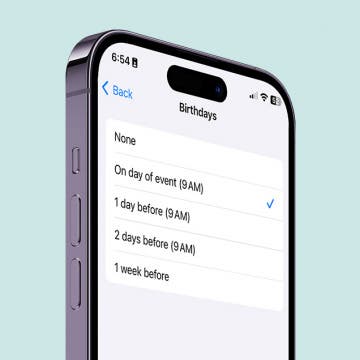

It's this info (NOT the “Tagged date”) that you'll need to convert into Unix time. For this, use for example the online converter at http://www.onlineconversion.com/unix_time.htm . Just fill in the “Convert a Date/Time to a Unix timestamp” fields (annotated with red below) and click the “Submit” button. The result you'll need to look for will be displayed in the textfield immediately below the buttons. I've annotated it with a green rectangle in the following screenshot:

(Note that you need to enter UTC times, not local ones (here in Finland, EET). This is why I've subtracted two from the hours.)
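If you'd rather not use an online converter, the same conversion takes a few lines of Python. This is a minimal sketch: the function names are hypothetical, and it assumes the date is available as text in the form `UTC 2013-01-01 12:00:00` (the sample value is made up). The second function covers the case where you only have a local time and a known UTC offset, such as +2 hours for EET.

```python
from datetime import datetime, timedelta, timezone

DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


def utc_text_to_unix(utc_text: str) -> int:
    """Convert 'UTC YYYY-MM-DD HH:MM:SS' (the 'UTC ' prefix is optional) to Unix time."""
    text = utc_text.strip()
    if text.startswith("UTC "):
        text = text[len("UTC "):]
    moment = datetime.strptime(text, DATE_FORMAT).replace(tzinfo=timezone.utc)
    return int(moment.timestamp())


def local_text_to_unix(local_text: str, utc_offset_hours: float) -> int:
    """Convert a local 'YYYY-MM-DD HH:MM:SS' with a known UTC offset to Unix time."""
    zone = timezone(timedelta(hours=utc_offset_hours))
    moment = datetime.strptime(local_text.strip(), DATE_FORMAT).replace(tzinfo=zone)
    return int(moment.timestamp())


# 12:00 UTC and 14:00 at UTC+2 are the same instant.
print(utc_text_to_unix("UTC 2013-01-01 12:00:00"))
print(local_text_to_unix("2013-01-01 14:00:00", 2))
```

Passing the offset explicitly avoids depending on the time zone settings of the computer running the script.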

Just copy it to the clipboard, open the output of my logger and search for the value. There will most probably be only one hit:

You'll need to delete every record before the matching one (in the screenshot below, everything up to and including vsec 70) from the track log, as well as every record after the end of the video. (For example, if the matching record is vsec 71 and it's a two-minute video, you'll need to delete the records from vsec 71+2*60+1 = 192 onwards.)
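The trimming step can also be scripted. The sketch below makes loud assumptions about the track file, none of which come from the logger itself: one record per line, one record per second, and the Unix timestamp of each record appearing verbatim somewhere in its line. The function name `trim_track` and the sample line layout are hypothetical; any header lines or renumbering the real format may need are not handled.

```python
def trim_track(lines, start_unix, video_seconds):
    """Keep only the records that belong to the video.

    lines:          track records, one per second, in order
    start_unix:     Unix timestamp of the start of the recording
    video_seconds:  length of the video in whole seconds
    """
    needle = str(start_unix)
    for index, line in enumerate(lines):
        if needle in line:
            # Keep the matching record plus one record per second of video,
            # mirroring the 71 + 2*60 + 1 = 192 arithmetic in the text.
            return lines[index:index + video_seconds + 1]
    raise ValueError("start timestamp not found in the track log")


# Made-up sample log: "vsec;unix_time", with vsec 71 at the start of the video.
sample = [f"{vsec};{1357041530 + vsec}" for vsec in range(1, 301)]
kept = trim_track(sample, 1357041601, 2 * 60)
print(kept[0], kept[-1], len(kept))
```

For the made-up two-minute example, the kept range runs from vsec 71 to vsec 191, so vsec 192 is the first deleted record at the end.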

After this, you can already upload the two files (the original video recording and the edited track file) to Ubipix's server via myTracks > Upload Recording (direct link).

An example

Among other videos, the one HERE (Sonkajärvi, Finland) has been processed exactly this way, with my logger and the stock Camera app.

UPDATE (04/01/2013): after talking to the developers of the app, I've found out you can save the original videos (converted to WebM playable by, among other players, VLC) with some manual work.

Basically, all you need to do is switch to the source code view. In Firefox, you'll need to right-click anywhere on the page (but not on an active component) and select “View Page Source”.

You'll be shown the source window, where you'll need to look for “webm” to quickly find the video. The second hit will be an already right-clickable link:

(The shot also shows I did search for "webm")

Just right-click the link and select “Copy Link Location”. You can then paste the just-acquired URL into a new browser tab, where all you'll need to do is save the current page (Cmd + S on a Mac).
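The search for “webm” in the page source can be automated as well, once the source has been saved as text. This is only a sketch: the function name `find_webm_urls` and the sample markup are hypothetical, the real page may be structured differently, and only absolute `http`/`https` URLs ending in `.webm` are picked up.

```python
import re

# Absolute URLs that end in ".webm" and contain no quotes, angle brackets or spaces.
WEBM_URL = re.compile(r"""https?://[^\s"'<>]+\.webm""")


def find_webm_urls(page_source: str) -> list:
    """Return the distinct .webm URLs found in the page source, in order of appearance."""
    matches = WEBM_URL.findall(page_source)
    # dict.fromkeys drops duplicates while keeping the original order.
    return list(dict.fromkeys(matches))


# Made-up markup, only to show the call.
sample_source = (
    '<video src="http://example.com/videos/123.webm"></video>'
    '<a href="http://example.com/videos/123.webm">download</a>'
    '<a href="http://example.com/videos/123.mp4">other</a>'
)
print(find_webm_urls(sample_source))
```

Each URL it returns can be opened in a new tab and saved exactly as described above.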

In Chrome, finding the URL is even easier (searching for “webm” in the source window will work just fine there too). Right-click the video and select “Inspect element” (highlighted in the following screenshot):

Navigate one or two rows upwards to the long <video> tag (highlighted below):

Click the link there. You'll be presented with this:

Select “Open Link in new Tab” (highlighted below):

In the new tab, you can just press Cmd + S to directly save the video (as you'd do in Firefox):